-

AI Disclosures

Transparent AI disclosures, Article 52, obligations, trustworthiness, qualitative, law

Transparent AI Disclosure Obligations: Who, What, When, Where, Why, How

2023-2024

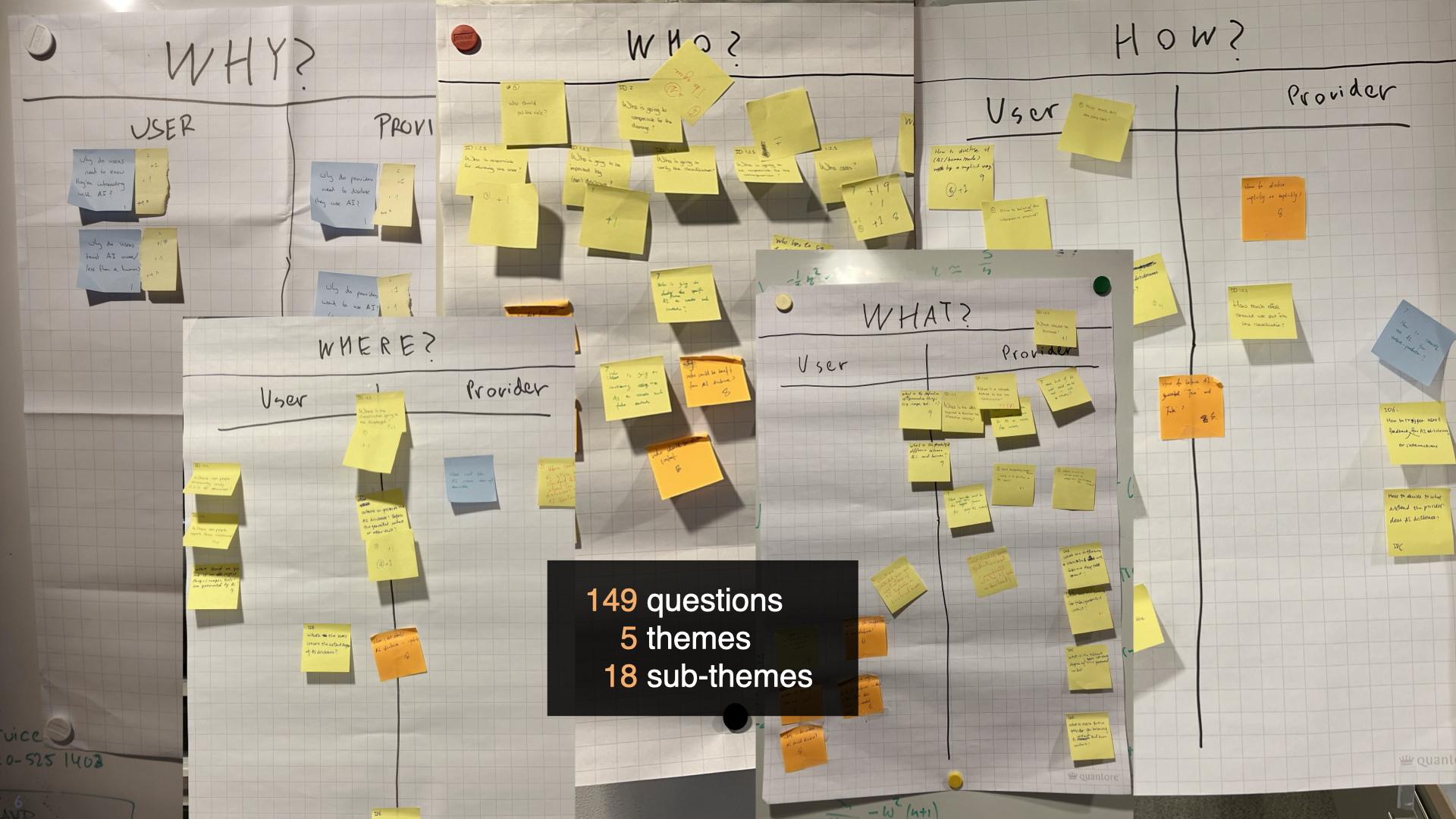

Advances in Generative Artificial Intelligence (AI) are resulting in AI-generated media output that is (nearly) indistinguishable from human-created content. This can drastically impact users and the media sector, especially given global risks of misinformation. While the currently discussed European AI Act aims at addressing these risks through Article 52's AI transparency obligations, its interpretation and implications remain unclear. In this early work, we adopt a participatory AI approach to derive key questions based on Article 52's disclosure obligations. We ran two workshops with researchers, designers, and engineers across disciplines (N=16), where participants deconstructed Article 52's relevant clauses using the 5W1H framework. We contribute a set of 149 questions clustered into five themes and 18 sub-themes. We believe these can not only help inform future legal developments and interpretations of Article 52, but also provide a starting point for Human-Computer Interaction research to (re-)examine disclosure transparency from a human-centered AI lens.

-

El Ali, A., Venkatraj, K., Morosoli, S., Naudts, L., Helberger, N., and Cesar, P. (2024). Transparent AI Disclosure Obligations: Who, What, When, Where, Why, How. To be published in Proc. CHI EA ’24. doi (arXiv)

-

GenAI for UXD

Generative AI, User Experience Design, user perceptions, interviews

Understanding Generative AI for User Experience Design

2023-2024

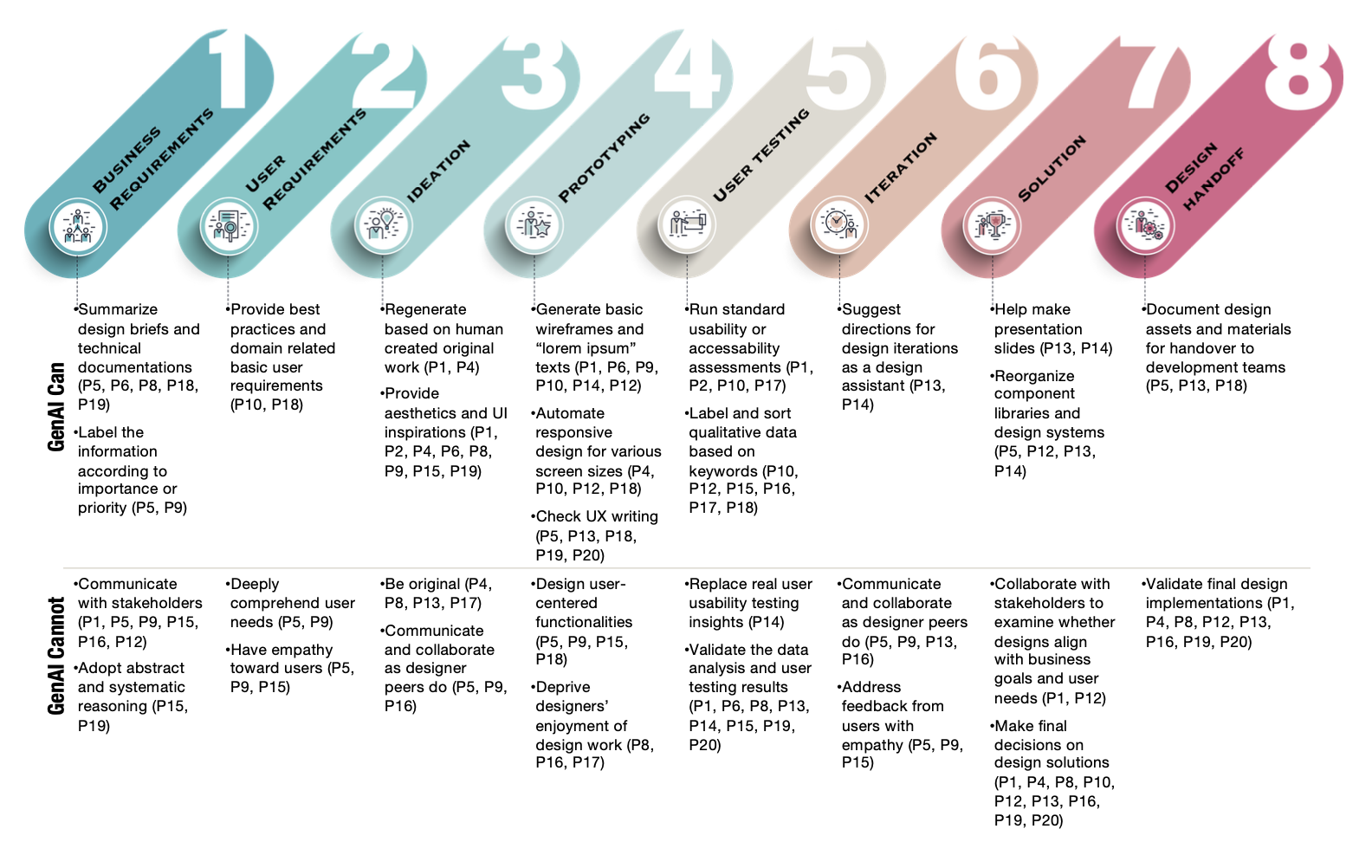

Among creative professionals, Generative Artificial Intelligence (GenAI) has sparked excitement over its capabilities and fear over unanticipated consequences. How does GenAI impact User Experience Design (UXD) practice, and are fears warranted? We interviewed 20 UX Designers, with diverse experience and across companies (startups to large enterprises). We probed them to characterize their practices, and sample their attitudes, concerns, and expectations. We found that experienced designers are confident in their originality, creativity, and empathic skills, and see GenAI's role as assistive. They emphasized the unique human factors of "enjoyment" and "agency", where humans remain the arbiters of "AI alignment". However, skill degradation, job replacement, and creativity exhaustion can adversely impact junior designers. We discuss implications for human-GenAI collaboration, specifically copyright and ownership, human creativity and agency, and AI literacy and access. Through the lens of responsible and participatory AI, we contribute a deeper understanding of GenAI fears and opportunities for UXD.

-

Li, J., Cao, H., Lin, L., Hou, Y., Zhu, R., and El Ali, A. (2024). User Experience Design Professionals’ Perceptions of Generative Artificial Intelligence. To be published in Proc. CHI ’24. doi (arXiv)

-

ShareYourReality

Avatar co-embodiment, perceptual crossing, haptics, agency

Haptics in Virtual Avatar Co-embodiment

2023-2024

Virtual co-embodiment enables two users to share a single avatar in Virtual Reality (VR). During such experiences, the illusion of shared motion control can break during joint-action activities, highlighting the need for position-aware feedback mechanisms. Drawing on the perceptual crossing paradigm, we explore how haptics can enable non-verbal coordination between co-embodied participants. In a within-subjects study (20 participant pairs), we examined the effects of vibrotactile haptic feedback (None, Present) and avatar control distribution (25-75%, 50-50%, 75-25%) across two VR reaching tasks (Targeted, Free-choice) on participants’ Sense of Agency (SoA), co-presence, body ownership, and motion synchrony. We found (a) lower SoA in the free-choice task with haptics than without, (b) higher SoA during the shared targeted task, (c) co-presence and body ownership were significantly higher in the free-choice task, (d) players’ hand motions synchronized more in the targeted task. We provide cautionary considerations when including haptic feedback mechanisms for avatar co-embodiment experiences.
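As a rough illustration of what an avatar control distribution such as 25-75% could mean in practice, the sketch below blends two users' hand positions with a weighted average. This is an assumption made for illustration only: the abstract does not state how control was combined, and the function name `blend_hand_position` and the tuple-based position type are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def blend_hand_position(pos_a: Vec3, pos_b: Vec3, weight_a: float) -> Vec3:
    """Blend two users' hand positions into one avatar hand position.

    weight_a is user A's share of control (e.g. 0.25, 0.5, 0.75);
    user B receives the remainder. A weighted average is assumed here,
    not confirmed as the method used in the study.
    """
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must be between 0 and 1")
    weight_b = 1.0 - weight_a
    x, y, z = (weight_a * a + weight_b * b for a, b in zip(pos_a, pos_b))
    return (x, y, z)


# Example: user A has 25% control, user B has 75%.
avatar_hand = blend_hand_position((0.0, 0.0, 0.0), (1.0, 2.0, 4.0), 0.25)
```

With a 25-75% split, the shared avatar hand ends up three quarters of the way toward user B's hand, which gives a sense of why the perceived agency of the two users can differ.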

-

Venkatraj, K., Meijer, W., Perusquía-Hernández, M., Huisman, G., and El Ali, A. (2024). ShareYourReality: Investigating Haptic Feedback and Agency in Virtual Avatar Co-embodiment. To be published in Proc. CHI ’24. doi (arXiv)

-

CardioceptionVR

Interoception, cardiac, virtual reality, heart rate, interoceptive accuracy

Cardiac Interoception in Virtual Reality

2023

Measuring interoception (‘perceiving internal bodily states’) has diagnostic and wellbeing implications. Since heartbeats are distinct and frequent, various methods aim at measuring cardiac interoceptive accuracy (CIAcc). However, the role of exteroceptive modalities for representing heart rate (HR) across screen-based and Virtual Reality (VR) environments remains unclear. Using a PolarH10 HR monitor, we develop a modality-dependent cardiac recognition task that modifies displayed HR. In a mixed-factorial design (N=50), we investigate how task environment (Screen, VR), modality (Audio, Visual, Audio-Visual), and real-time HR modifications (±15%, ±30%, None) influence CIAcc, interoceptive awareness, mind-body measures, VR presence, and post-experience responses. Findings showed that participants confused their HR with underestimates up to 30%; environment did not affect CIAcc but influenced mind-related measures; modality did not influence CIAcc, however including audio increased interoceptive awareness; and VR presence inversely correlated with CIAcc. We contribute a lightweight and extensible cardiac interoception measurement method, and implications for biofeedback displays.
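The real-time HR modifications (±15%, ±30%, None) can be read as a simple scaling of the measured heart rate before it is displayed. The sketch below shows that arithmetic under this assumption; the function name `displayed_heart_rate` and the constant `MODIFICATIONS` are hypothetical and not taken from the project's code.

```python
# Relative modifications listed in the abstract: ±15%, ±30%, and None (0%).
MODIFICATIONS = (-0.30, -0.15, 0.0, 0.15, 0.30)


def displayed_heart_rate(measured_bpm: float, modification: float) -> float:
    """Return the heart rate to display, given a relative modification.

    Assumes the modification is a proportional scaling of the measured
    value, e.g. +0.15 shows a rate 15% higher than the measured one.
    """
    if modification not in MODIFICATIONS:
        raise ValueError("unsupported modification level")
    if measured_bpm <= 0:
        raise ValueError("measured_bpm must be positive")
    return measured_bpm * (1.0 + modification)


# Example: a measured 80 bpm shown with every modification level.
for level in MODIFICATIONS:
    print(f"{level:+.0%}: {displayed_heart_rate(80.0, level):.1f} bpm")
```

In a recognition task of this kind, participants would then judge whether the displayed rate matches their own heartbeat.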

-

BreatheWithMe

Breathing, visualization, vibrotactile, social breath awareness, collaborative tasks, synchrony

BreatheWithMe: Multimodal, Social Breath Awareness

2022-2023

Sharing breathing signals has the capacity to provide insights into hidden experiences and enhance interpersonal communication. However, it remains unclear how the modality of breath signals (visual, haptic) is socially interpreted during collaborative tasks. In this mixed-methods study, we design and evaluate BreatheWithMe, a prototype for real-time sharing and receiving of breathing signals through visual, vibrotactile, or visual-vibrotactile modalities. In a within-subjects study (15 pairs), we investigated the effects of modality on breathing synchrony, social presence, and overall user experience. Key findings showed: (a) there were no significant effects of visualization modality on breathing synchrony, only on deliberate music-driven synchronization; (b) visual modality was preferred over vibrotactile feedback, despite no differences across social presence dimensions; (c) BreatheWithMe was perceived to be an insightful window into others, but raised data exposure and social acceptability concerns. We contribute insights into the design of multi-modal real-time breathing visualization systems for colocated, collaborative tasks.

-

El Ali, A., Stepanova, R. E., Palande, S., Mader, A., Cesar, P., and Jansen, K. (2023). BreatheWithMe: Exploring Visual and Vibrotactile Displays for Social Breath Awareness during Colocated, Collaborative Tasks. To be published in Proc. CHI EA ’23. preprint

-

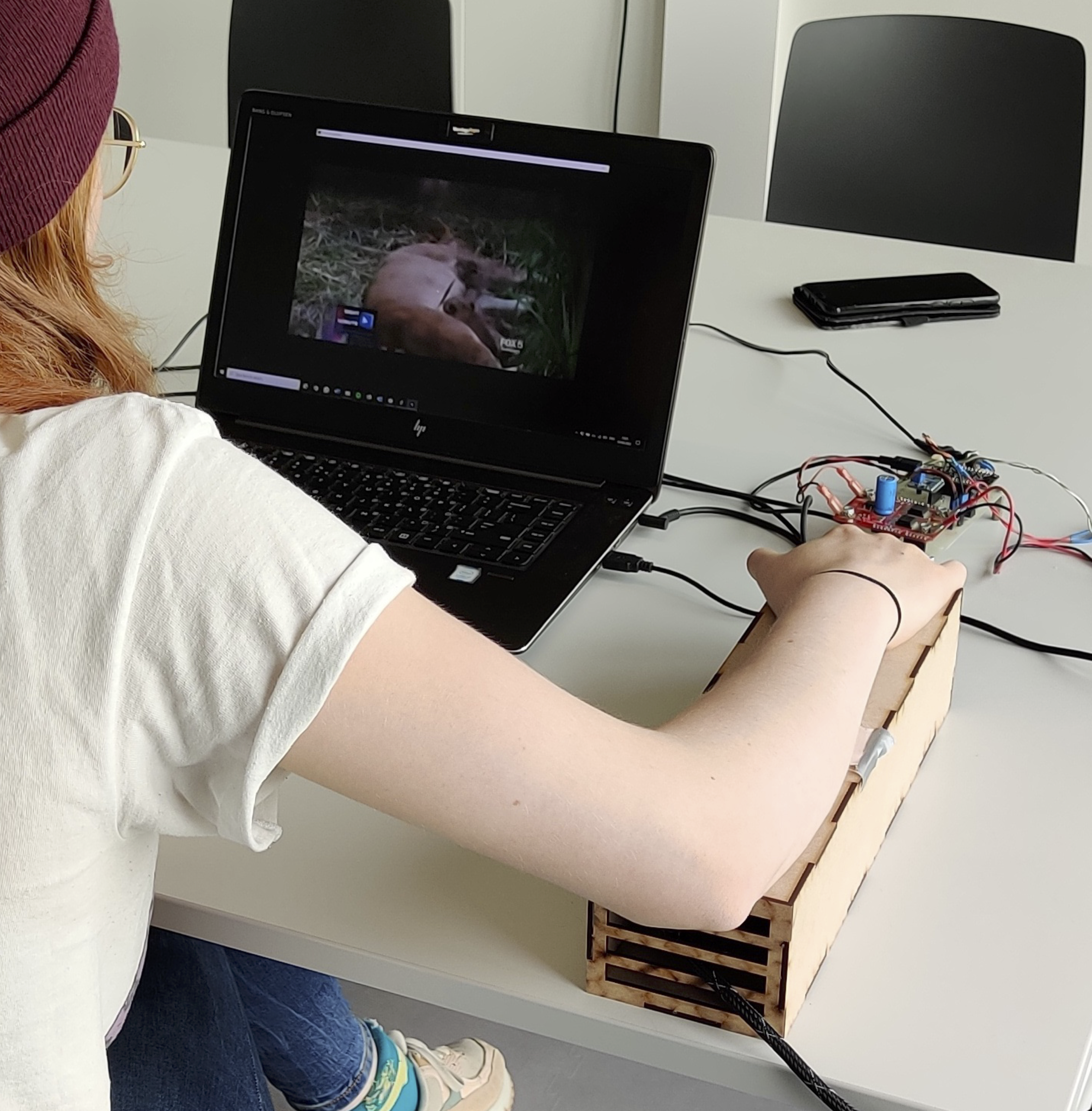

FeelTheNews

Affective haptics, thermal, vibrotactile, news, video

FeelTheNews: Affective Haptics for News Videos

2022-2023

Emotion plays a key role in the emerging wave of immersive, multi-sensory audience news engagement experiences. Since emotions can be triggered by somatosensory feedback, in this work we explore how augmenting news video watching with haptics can influence affective perceptions of news. Using a mixed-methods approach, we design and evaluate FeelTheNews, a prototype that combines vibrotactile and thermal stimulation (Matching, 70Hz/20°C, 200Hz/40°C) during news video watching. In a within-subjects study (N=20), we investigate the effects of haptic stimulation and video valence on perceived valence, emotion intensity, comfort, and overall haptic experiences. Findings showed: (a) news valence and emotion intensity ratings were not affected by haptics, (b) no stimulation was more comfortable than including stimulation, (c) attention and engagement with the news can override haptic sensations, and (d) users' perceived agency over their reactions is critical to avoid distrust. We contribute cautionary insights for haptic augmentation of the news watching experience.

-

Ooms, S., Lee, M., Cesar, P., and El Ali, A. (2023). FeelTheNews: Augmenting Affective Perceptions of News Videos with Thermal and Vibrotactile Stimulation. To be published in Proc. CHI EA ’23. preprint

-

Avatar Biosignals

Biosignals, social VR, visualization, entertainment, perception

Avatar Biosignal Visualizations in Social Virtual Reality

2021-2022

Visualizing biosignals can be important for social Virtual Reality (VR), where avatar non-verbal cues are missing. While several biosignal representations exist, it remains unclear how to design effective visualizations and how users perceive them within social VR entertainment. We adopt a mixed-methods approach to design biosignals for social VR entertainment. Using survey (N=54), context-mapping (N=6), and co-design (N=6) methods, we derive four visualizations. We then ran a within-subjects study (N=32) in a virtual jazz-bar to investigate how heart rate (HR) and breathing rate (BR) visualizations, and signal rate, influence perceived avatar arousal, user distraction, and preferences. Findings show that skeuomorphic visualizations for both biosignals allow differentiable arousal inference; skeuomorphic and particles were least distracting for HR, whereas all were similarly distracting for BR; biosignal perceptions often depend on avatar relations, entertainment type, and emotion inference of avatars versus spaces. We contribute HR and BR visualizations, and considerations for designing social VR entertainment biosignal visualizations.

-

Lee, S., El Ali, A., Wijntjes, M., & Cesar, P. (2022). Understanding and Designing Avatar Biosignal Visualizations for Social Virtual Reality Entertainment. To be published in Proc. CHI ’22. doi

-

El Ali, A., Lee, S., & Cesar, P. (2022). Social Virtual Reality Avatar Biosignal Animations as Availability Status Indicators. Position paper. To be presented at CHI 2022 Workshop "Social Presence in Virtual Event Spaces".

-

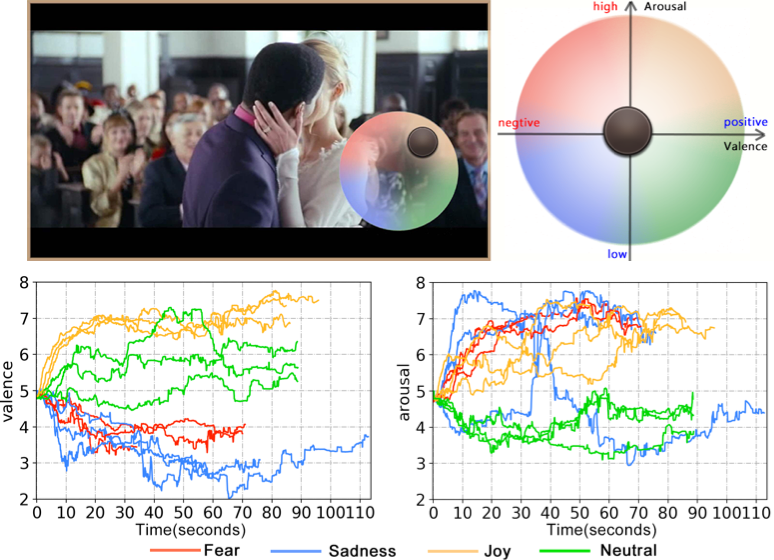

RCEA-360VR

Emotion, annotation, 360° video, virtual reality, ground truth, labels, viewport-dependent, real-time, continuous

Real-time, Continuous Emotion Annotation for 360° VR Videos

2019-2021

Precise emotion ground truth labels for 360° virtual reality (VR) video watching are essential for fine-grained predictions under varying viewing behavior. However, current annotation techniques either rely on post-stimulus discrete self-reports, or real-time, continuous emotion annotations (RCEA) but only for desktop/mobile settings. We present RCEA for 360° VR videos (RCEA-360VR), where we evaluate in a controlled study (N=32) the usability of two peripheral visualization techniques: HaloLight and DotSize. We furthermore develop a method that considers head movements when fusing labels. Using physiological, behavioral, and subjective measures, we show that (1) both techniques do not increase users’ workload, sickness, nor break presence (2) our continuous valence and arousal annotations are consistent with discrete within-VR and original stimuli ratings (3) users exhibit high similarity in viewing behavior, where fused ratings perfectly align with intended labels. Our work contributes usable and effective techniques for collecting fine-grained viewport-dependent emotion labels in 360° VR.

-

Xue, T., El Ali, A., Ding, G., & Cesar, P. (2020). Annotation Tool for Precise Emotion Ground Truth Label Acquisition While Watching 360-degree VR Videos. In Proc. AIVR '20 Demos.

-

Xue, T., El Ali, A., Ding, G., & Cesar, P. (2021). Investigating the Relationship between Momentary Emotion Self-reports and Head and Eye Movements in HMD-based 360° VR Video Watching. To be published in Proc CHI EA '21. preprint

-

Xue, T., El Ali, A., Zhang, T., Ding, G., & Cesar, P. (2021). RCEA-360VR: Real-time, Continuous Emotion Annotation in 360° VR Videos for Collecting Precise Viewport-dependent Ground Truth Labels. To be published in Proc CHI '21. preprint source code

-

Xue, T., El Ali, A., Zhang, T., Ding, G., & Cesar, P. (2021). CEAP-360VR: A Continuous Physiological and Behavioral Emotion Annotation Dataset for 360° Videos. In IEEE Transactions on Multimedia. doi preprint dataset webpage data + code

-

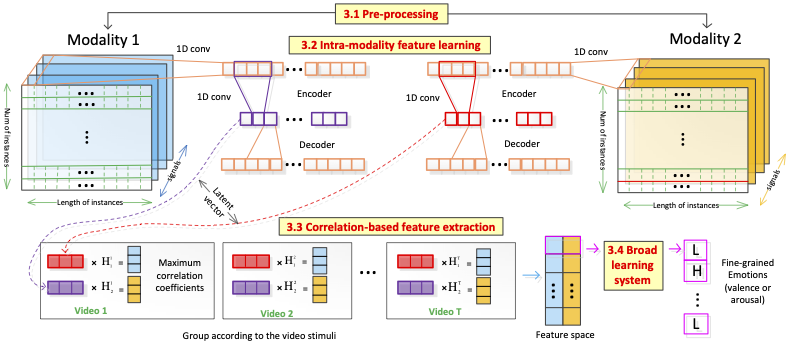

CorrNet

Emotion recognition, video, physiological signals, machine learning

Fine-Grained Emotion Recognition with Physio Signals

2019-2022

Recognizing user emotions while they watch short-form videos anytime and anywhere is essential for facilitating video content customization and personalization. However, most works either classify a single emotion per video stimuli, or are restricted to static, desktop environments. To address this, we propose a correlation-based emotion recognition algorithm (CorrNet) to recognize the valence and arousal (V-A) of each instance (fine-grained segment of signals) using only wearable, physiological signals (e.g., electrodermal activity, heart rate). CorrNet takes advantage of features both inside each instance (intra-modality features) and between different instances for the same video stimuli (correlation-based features). We first test our approach on an indoor-desktop affect dataset (CASE), and thereafter on an outdoor-mobile affect dataset (MERCA) which we collected using a smart wristband and wearable eyetracker. Results show that for subject-independent binary classification (high-low), CorrNet yields promising recognition accuracies: 76.37% and 74.03% for V-A on CASE, and 70.29% and 68.15% for V-A on MERCA. Our findings show: (1) instance segment lengths between 1–4 s result in highest recognition accuracies (2) accuracies between laboratory-grade and wearable sensors are comparable, even under low sampling rates (≤64 Hz) (3) large amounts of neutral V-A labels, an artifact of continuous affect annotation, result in varied recognition performance.

-

Zhang, T., El Ali, A., Wang, C., Hanjalic, A., & Cesar, P. (2021). CorrNet: Fine-Grained Emotion Recognition for Video Watching Using Wearable Physiological Sensors. In Sensors 2021, 21(1), 52. doi (open access)

-

Zhang, T., El Ali, A., Hanjalic, A., & Cesar, P. (2022). Few-shot Learning for Fine-grained Emotion Recognition using Physiological Signals. To be published in IEEE Transactions on Multimedia.

-

ThermalWear

Thermal, affect, emotion, voice, prosody, wearable, display, chest

ThermalWear: Augmenting Voice Messages with Affect

2019-2020

Voice is a rich modality for conveying emotions, however emotional prosody production can be situationally or medically impaired. Since thermal displays have been shown to evoke emotions, we explore how thermal stimulation can augment perception of neutrally-spoken voice messages with affect. We designed ThermalWear, a wearable on-chest thermal display, then tested in a controlled study (N=12) the effects of fabric, thermal intensity, and direction of change. Thereafter, we synthesized 12 neutrally-spoken voice messages, validated (N=7) them, then tested (N=12) if thermal stimuli can augment their perception with affect. We found warm and cool stimuli (a) can be perceived on the chest, and quickly without fabric (4.7-5s) (b) do not incur discomfort (c) generally increase arousal of voice messages and (d) increase / decrease message valence, respectively. We discuss how thermal displays can augment voice perception, which can enhance voice assistants and support individuals with emotional prosody impairments.

-

El Ali, A., Yang, X., Ananthanarayan, S., Röggla, T., Jansen, J., Hartcher-O’Brien, J., Jansen, K. & Cesar, P. (2020). ThermalWear: Exploring Wearable On-chest Thermal Displays to Augment Voice Messages with Affect. In Proc. CHI ’20. doi pdf source code video

-

RCEA

Emotion; annotation; mobile; video; real-time; continuous; labels

Real-time, Continuous Emotion Annotation for Mobile Video

2019-2020

Collecting accurate and precise emotion ground truth labels for mobile video watching is essential for ensuring meaningful predictions. However, video-based emotion annotation techniques either rely on post-stimulus discrete self-reports, or allow real-time, continuous emotion annotations (RCEA) only for desktop settings. Following a user-centric approach, we designed an RCEA technique for mobile video watching, and validated its usability and reliability in a controlled, indoor (N=12) and later outdoor (N=20) study. Drawing on physiological measures, interaction logs, and subjective workload reports, we show that (1) RCEA is perceived to be usable for annotating emotions while mobile video watching, without increasing users’ mental workload (2) the resulting time-variant annotations are comparable with intended emotion attributes of the video stimuli (classification error for valence: 8.3%; arousal: 25%). We contribute a validated annotation technique and associated annotation fusion method, that is suitable for collecting fine-grained emotion annotations while users watch mobile videos.

-

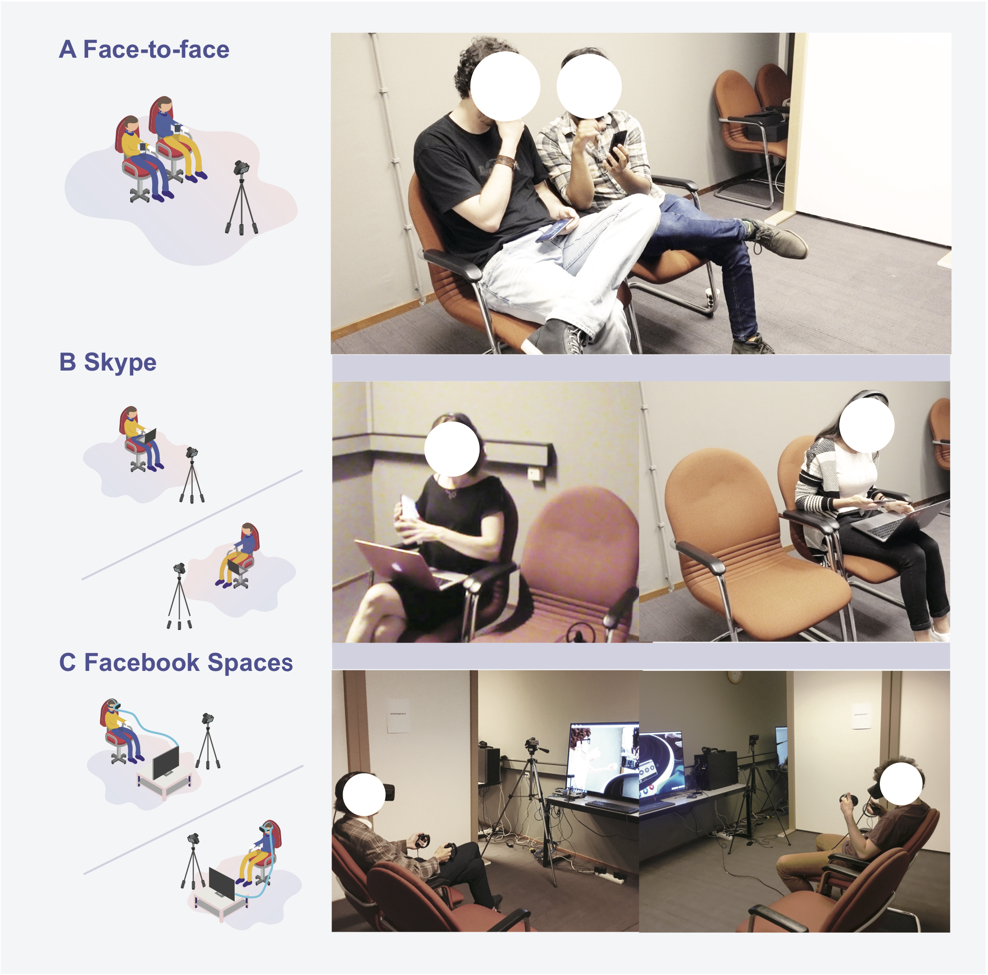

Measure Social VR

Social virtual reality, photo sharing, questionnaire, immersion, presence

Measuring Experiences in Social VR

2018-2019

Millions of photos are shared online daily, but the richness of interaction compared with face-to-face (F2F) sharing is still missing. While this may change with social Virtual Reality (socialVR), we still lack tools to measure such immersive and interactive experiences. In this paper, we investigate photo sharing experiences in immersive environments, focusing on socialVR. Running context mapping (N=10), an expert creative session (N=6), and an online experience clustering questionnaire (N=20), we develop and statistically evaluate a questionnaire to measure photo sharing experiences. We then ran a controlled, within-subject study (N=26 pairs) to compare photo sharing under F2F, Skype, and Facebook Spaces. Using interviews, audio analysis, and our questionnaire, we found that socialVR can closely approximate F2F sharing. We contribute empirical findings on the immersiveness differences between digital communication media, and propose a socialVR questionnaire that can in the future generalize beyond photo sharing.

-

Li, J., Kong, Y., Röggla, T., De Simone, F., Ananthanarayan, S., de Ridder, H., El Ali, A., & Cesar, P. (2019). Measuring and Understanding Photo Sharing Experiences in Social Virtual Reality. In Proc. CHI '19. doi pdf Social VR Questionnaires video

-

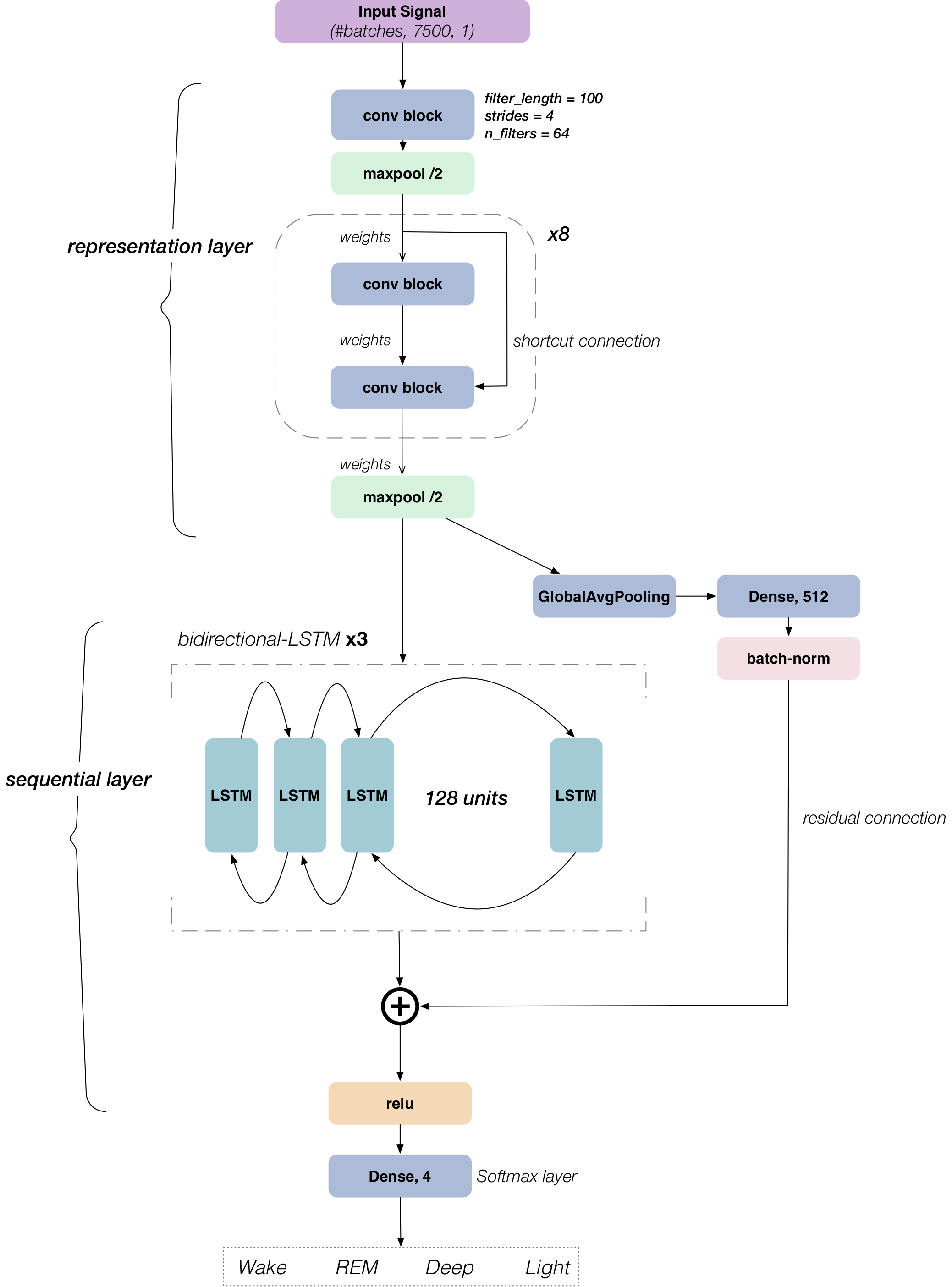

DeepSleep

Ballistocardiography, deep learning, sleep classification

DeepSleep: Classifying Sleep Stages

2018-2019

Current techniques for tracking sleep are either obtrusive (Polysomnography) or low in accuracy (wearables). In this early work, we model a sleep classification system using an unobtrusive Ballistocardiographic (BCG)-based heart sensor signal collected from a commercially available pressure-sensitive sensor sheet. We present DeepSleep, a hybrid deep neural network architecture comprising CNN and LSTM layers. We further employed a 2-phase training strategy to build a pre-trained model and to tackle the limited dataset size. Our model results in a classification accuracy of 74%, 82%, 77% and 63% using Dozee BCG, MIT-BIH’s ECG, Dozee’s ECG and Fitbit’s PPG datasets, respectively. Furthermore, our model shows a positive correlation (r = 0.43) with the SATED perceived sleep quality scores. We show that BCG signals are effective for long-term sleep monitoring, but currently not suitable for medical diagnostic purposes.

-

Rao, S., El Ali, A., & Cesar, P. (2019). DeepSleep: A Ballistocardiographic Deep Learning Approach for Classifying Sleep Stages. In ICT.OPEN 2019. [2nd poster prize award]

-

Detect News Sympathy

Twitter, sentiment analysis, crowdsourcing, machine learning

Measuring & Classifying News Media Sympathy

2017-2018

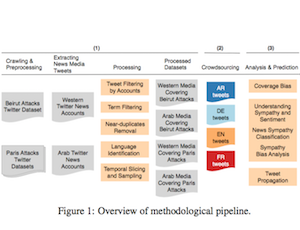

This paper investigates bias in coverage between Western and Arab media on Twitter after the November 2015 Beirut and Paris terror attacks. Using two Twitter datasets covering each attack, we investigate how Western and Arab media differed in coverage bias, sympathy bias, and resulting information propagation. We crowdsourced sympathy and sentiment labels for 2,390 tweets across four languages (English, Arabic, French, German), built a regression model to characterize sympathy, and thereafter trained a deep convolutional neural network to predict sympathy. Key findings show: (a) both events were disproportionately covered (b) Western media exhibited less sympathy, where each media coverage was more sympathetic towards the country affected in their respective region (c) Sympathy predictions supported ground truth analysis that Western media was less sympathetic than Arab media (d) Sympathetic tweets do not spread any further. We discuss our results in light of global news flow, Twitter affordances, and public perception impact.

-

El Ali, A., Stratmann, T., Park, S., Schöning, J., Heuten, W. & Boll, S. (2018). Measuring, Understanding, and Classifying News Media Sympathy on Twitter after Crisis Events. To be published in Proc. CHI '18. Montréal, Canada. pdf bib

@inproceedings{Elali2018, title = {Measuring, Understanding, and Classifying News Media Sympathy on Twitter after Crisis Events}, author = {Abdallah El Ali and Tim C Stratmann and Souneil Park and Johannes Sch{\"o}ning and Wilko Heuten and Susanne CJ Boll}, booktitle = {Proceedings of the International Conference on Human Factors in Computing Systems 2018}, series = {CHI '18}, year = {2018}, location = {Montreal, Canada}, pages = {#-#}, url = {https://doi.org/10.1145/3173574.3174130} }

-

Face2Emoji

Face2Emoji, emoji, crowdsourcing, emotion recognition, facial expression, input, keyboard, text entry

Face2Emoji

2016-2017

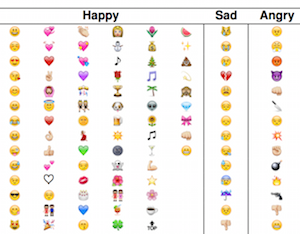

One way to indicate nonverbal cues is by sending emoji (e.g., 😂 ), which requires users to make a selection from large lists. Given the growing number of emojis, this can incur user frustration, and instead we propose Face2Emoji, where we use a user's facial emotional expression to filter out the relevant set of emoji by emotion category. To validate our method, we crowdsourced 15,155 emoji to emotion labels across 308 website visitors, and found that our 202 tested emojis can indeed be classified into seven basic (including Neutral) emotion categories. To recognize facial emotional expressions, we use deep convolutional neural networks, where early experiments show an overall accuracy of 65% on the FER-2013 dataset. We discuss our future research on Face2Emoji, addressing how to improve our model performance, what type of usability test to run with users, and what measures best capture the usefulness and playfulness of our system.
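The filtering step described above can be sketched as a lookup from a recognized emotion category to the emoji labeled with that category. The sketch below is illustrative only: the category names are assumed to follow the FER-2013 label set, the emoji-to-emotion table `EMOJI_LABELS` is a tiny hypothetical stand-in for the crowdsourced labels, and `filter_emojis` is a hypothetical helper, not the project's code.

```python
from typing import Dict, List

# Seven categories (six basic emotions plus Neutral); names assumed
# to mirror the FER-2013 label set.
EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral")

# Hypothetical emoji-to-emotion labels, standing in for crowdsourced data.
EMOJI_LABELS: Dict[str, str] = {
    "😂": "happiness",
    "😊": "happiness",
    "😢": "sadness",
    "😡": "anger",
    "😱": "fear",
    "😮": "surprise",
    "🤢": "disgust",
    "😐": "neutral",
}


def filter_emojis(detected_emotion: str, labels: Dict[str, str] = EMOJI_LABELS) -> List[str]:
    """Return only the emoji whose label matches the detected emotion."""
    if detected_emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion category: {detected_emotion}")
    return [emoji for emoji, emotion in labels.items() if emotion == detected_emotion]


# Example: the facial expression classifier (not shown) reports "happiness".
suggested = filter_emojis("happiness")
```

In a keyboard setting, the returned shortlist would replace the full emoji list, so the user chooses from a handful of candidates rather than scrolling through hundreds.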

-

El Ali, A., Wallbaum, T., Wasmann, M., Heuten, W. & Boll, S. (2017). Face2Emoji: Using Facial Emotional Expressions to Filter Emojis. In Proc. CHI '17 EA. Denver, CO, USA. pdf doi bib

@inproceedings{ElAli:2017:FUF:3027063.3053086, author = {El Ali, Abdallah and Wallbaum, Torben and Wasmann, Merlin and Heuten, Wilko and Boll, Susanne CJ}, title = {Face2Emoji: Using Facial Emotional Expressions to Filter Emojis}, booktitle = {Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems}, series = {CHI EA '17}, year = {2017}, isbn = {978-1-4503-4656-6}, location = {Denver, Colorado, USA}, pages = {1577--1584}, numpages = {8}, url = {http://doi.acm.org/10.1145/3027063.3053086}, doi = {10.1145/3027063.3053086}, acmid = {3053086}, publisher = {ACM}, address = {New York, NY, USA}, keywords = {crowdsourcing, emoji, emotion recognition, face2emoji, facial expression, input, keyboard, text entry}, }

-

Wayfinding Strategies

HCI4D, ICT4D, Lebanon, navigation, wayfinding, mapping services, giving directions, addressing, strategies

HCI4D Wayfinding Strategies

2015-2016

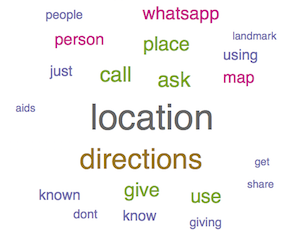

While HCI for development (HCI4D) research has typically focused on technological practices of poor and low-literate communities, little research has addressed how technology literate individuals living in a poor infrastructure environment use technology. Our work fills this gap by focusing on Lebanon, a country with longstanding political instability, and the wayfinding issues there stemming from missing street signs and names, a poor road infrastructure, and a non-standardized addressing system. We examine the relationship between technology literate individuals' navigation and direction giving strategies and their usage of current digital navigation aids. Drawing on an interview study (N=12) and a web survey (N=85), our findings show that while these individuals rely on mapping services and WhatsApp's share location feature to aid wayfinding, many technical and cultural problems persist that are currently resolved through social querying.

-

El Ali, A., Bachour, K., Heuten, W. & Boll, S. (2016). Technology Literacy in Poor Infrastructure Environments: Characterizing Wayfinding Strategies in Lebanon. In Proc. MobileHCI '16. Florence, Italy. pdf doi bib

@inproceedings{ElAli:2016:TLP:2935334.2935352, author = {El Ali, Abdallah and Bachour, Khaled and Heuten, Wilko and Boll, Susanne}, title = {Technology Literacy in Poor Infrastructure Environments: Characterizing Wayfinding Strategies in Lebanon}, booktitle = {Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services}, series = {MobileHCI '16}, year = {2016}, isbn = {978-1-4503-4408-1}, location = {Florence, Italy}, pages = {266--277}, numpages = {12}, url = {http://doi.acm.org/10.1145/2935334.2935352}, doi = {10.1145/2935334.2935352}, acmid = {2935352}, publisher = {ACM}, address = {New York, NY, USA}, keywords = {HCI4D, ICT4D, Lebanon, addressing, giving directions, mapping services, mobile, navigation, strategies, wayfinding}, }

-

VapeTracker

E-cigarettes, vaping, tracking, sensors, behavior change technology

VapeTracker

2015-2016

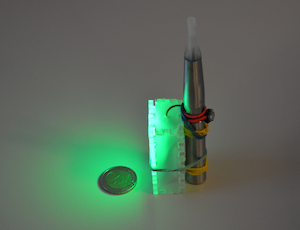

Despite current controversy over e-cigarettes as a smoking cessation aid, we present early work based on a web survey (N=249) that shows that some e-cigarette users (46.2%) want to quit altogether, and that behavioral feedback that can be tracked can fulfill that purpose. Based on our survey findings, we designed VapeTracker, an early prototype that can attach to any e-cigarette device to track vaping activity. Currently, we are exploring how to improve our VapeTracker prototype using ambient feedback mechanisms, and how to account for behavior change models to support quitting e-cigarettes.

-

El Ali, A., Matviienko, A., Feld, Y., Heuten, W. & Boll, S. (2016). VapeTracker: Tracking Vapor Consumption to Help E-cigarette Users Quit. In Proc. CHI '16 EA. San Jose, CA, USA. pdf doi bib

@inproceedings{ElAli:2016:VTV:2851581.2892318, author = {El Ali, Abdallah and Matviienko, Andrii and Feld, Yannick and Heuten, Wilko and Boll, Susanne}, title = {VapeTracker: Tracking Vapor Consumption to Help E-cigarette Users Quit}, booktitle = {Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems}, series = {CHI EA '16}, year = {2016}, isbn = {978-1-4503-4082-3}, location = {San Jose, California, USA}, pages = {2049--2056}, numpages = {8}, url = {http://doi.acm.org/10.1145/2851581.2892318}, doi = {10.1145/2851581.2892318}, acmid = {2892318}, publisher = {ACM}, address = {New York, NY, USA}, keywords = {behavior change technology, e-cigarettes, habits, health, prototype, sensors, tracking, vapetracker, vaping}, }

-

MagiThings

Security, usability, music composition, gaming, 3D gestures, magnets

MagiThings

2013-2014

As part of an internship at Telekom Innovation Labs (T-Labs) in Berlin, Germany, I designed and executed (under supervision of Dr. Hamed Ketabdar) 3 controlled user studies (under the MagiThings project) using the Around Device Interaction (ADI) paradigm to investigate a) the usability and security of magnet-based air signature authentication methods for usable and secure smartphone access b) playful music composition and gaming.

- El Ali, A. & Ketabdar, H. (2015). Investigating Handedness in Air Signatures for Magnetic 3D Gestural User Authentication. In Proc. MobileHCI '15 Adjunct. Copenhagen, Denmark. pdf doi

- El Ali, A. & Ketabdar, H. (2013). Magnet-based Around Device Interaction for Playful Music Composition and Gaming. To be published in International Journal of Mobile Human Computer Interaction (IJMHCI). Preprint available: pdf doi

-

CountMeIn

Urban space, gaming, NFC, waiting time, touch interaction, design, development, Android

CountMeIn

2013

In this work, our focus was on improving the waiting time experience in public places (e.g., waiting for the train to come) by increasing collaboration and play amongst friends and strangers. We tested whether an NFC-enabled mobile pervasive game (in allowing physical interaction with an NFC tag display) reaps more social benefits than a touchscreen version.

- Wolbert, M., El Ali, A. & Nack, F. (2014). CountMeIn: Evaluating Social Presence in a Collaborative Pervasive Mobile Game Using NFC and Touchscreen Interaction. In Proc. ACE '14. Madeira, Portugal. pdf doi

- Wolbert, M. & El Ali, A. (2013). Evaluating NFC and Touchscreen Interactions in Collaborative Mobile Pervasive Games. In Proc. MobileHCI '13. Munich, Germany. pdf doi

-

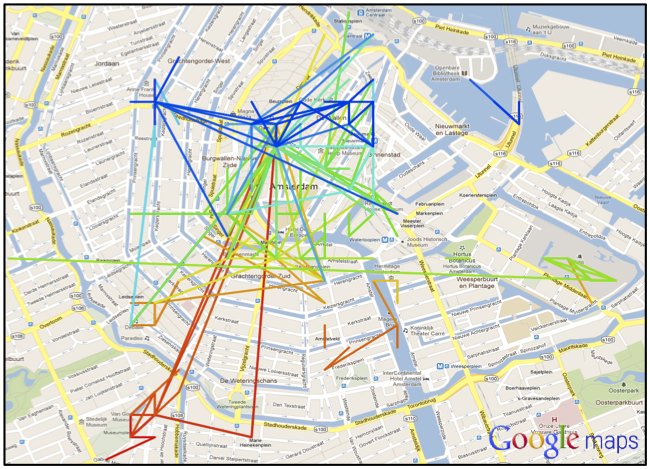

Photographer Paths

Social media mining, Flickr, scenic routes, maps, Amsterdam

Photographer Paths

2012-2013

I conceptualized, designed, evaluated and supervised the technical development of a route recommendation system that makes use of large amounts of geotagged image data (from Flickr) to compute sequence-based non-efficiency driven routes in the city of Amsterdam. The central premise is that pedestrians do not always want to get from point A to point B as quickly as possible, but rather would like to explore hidden, more 'local' routes.

-

El Ali, A., van Sas, S. & Nack, F. (2013). Photographer Paths: Sequence Alignment of Geotagged Photos for Exploration-based Route Planning. In proceedings of the 16th ACM Conference on Computer Supported Cooperative Work and Social Computing (CSCW '13), 2013, San Antonio, Texas. pdf doi bib

@inproceedings{ElAli:2013:PPS:2441776.2441888, author = {El Ali, Abdallah and van Sas, Sicco N.A. and Nack, Frank}, title = {Photographer Paths: Sequence Alignment of Geotagged Photos for Exploration-based Route Planning}, booktitle = {Proceedings of the 2013 Conference on Computer Supported Cooperative Work}, series = {CSCW '13}, year = {2013}, isbn = {978-1-4503-1331-5}, location = {San Antonio, Texas, USA}, pages = {985--994}, numpages = {10}, url = {http://doi.acm.org/10.1145/2441776.2441888}, doi = {10.1145/2441776.2441888}, acmid = {2441888}, publisher = {ACM}, address = {New York, NY, USA}, keywords = {exploration-based route planning, geotagged photos, sequence alignment, ugc, urban computing}, }

-

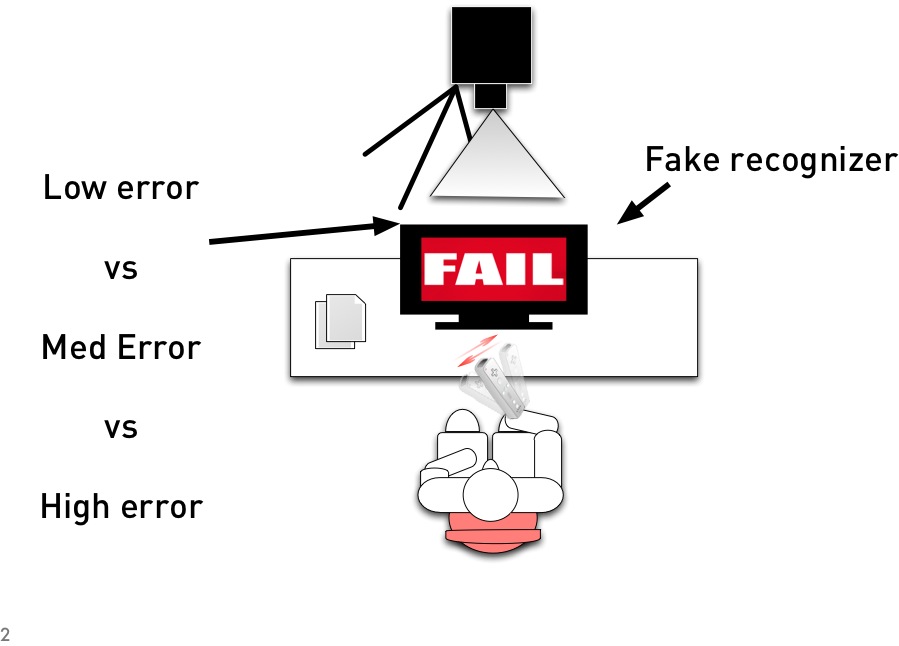

3D Gestures and Errors

Errors, usability, user experience, 3D gestures, lab study, gesture recognition

3D Gestures and Errors

2011-2012

As part of an internship at Nokia Research Center Tampere, I designed and executed (in collaboration with Nokia Research Center Espoo) a controlled study that investigated the effects of error on the usability and UX of device-based gesture interaction.

-

El Ali, A., Kildal, J. & Lantz, V. (2012). Fishing or a Z?: Investigating the Effects of Error on Mimetic and Alphabet Device-based Gesture Interaction. In Proceedings of the 14th international conference on Multimodal Interaction (ICMI '12), 2012, Santa Monica, California. [Best student paper award] pdf doi bib

@inproceedings{ElAli:2012:FZI:2388676.2388701, author = {El Ali, Abdallah and Kildal, Johan and Lantz, Vuokko}, title = {Fishing or a Z?: Investigating the Effects of Error on Mimetic and Alphabet Device-based Gesture Interaction}, booktitle = {Proceedings of the 14th ACM International Conference on Multimodal Interaction}, series = {ICMI '12}, year = {2012}, isbn = {978-1-4503-1467-1}, location = {Santa Monica, California, USA}, pages = {93--100}, numpages = {8}, url = {http://doi.acm.org/10.1145/2388676.2388701}, doi = {10.1145/2388676.2388701}, acmid = {2388701}, publisher = {ACM}, address = {New York, NY, USA}, keywords = {alphabet gestures, device-based gesture interaction, errors, mimetic gestures, usability, workload}, }

-

Graffiquity

Location-based, multimedia messaging, urban space, user behavior, diary study, longitudinal

Graffiquity

2009-2010

As part of work under the MOCATOUR (Mobile Cultural Access for Tourists) project (part of Amsterdam Living Lab), I designed and executed a user study to investigate what factors are important when people create location-aware multimedia messages. Using the Graffiquity prototype as a probe, I ran a 2-week study using a paper-diary method to study this messaging behavior. This involved some Android interface development for the Graffiquity prototype, as well as designing low-fidelity diaries to gather longitudinal qualitative user data.

- El Ali, A., Nack, F. & Hardman, L. (2011). Good Times?! 3 Problems and Design Considerations for Playful HCI. In International Journal of Mobile Human Computer Interaction (IJMHCI), 3, 3, p.50-65. pdf doi

- El Ali, A., Nack, F. & Hardman, L. (2010). Understanding contextual factors in location-aware multimedia messaging. In Proceedings of the 12th international conference on Multimodal Interfaces (ICMI-MLMI '10), 2010, Beijing, China. pdf doi